Intro

Multi-touch screens have been developed over the last decades, providing a more convenient way for human-computer interaction. In recent years, touch-gesture-based applications have grown rapidly with the spread of smartphones and tablets equipped with multi-touch screens.

This article briefly explains the technology, architecture, and principles of today's multi-touch screens, and the driver software for gesture recognition in smartphones.

Categories of Touch Gestures

Depending on the interaction context, touch gestures vary in shape, number of strokes, and number of simultaneous touches. The terms "stroke" and "touch number" are defined as follows:

- stroke: the trajectory of a touch

- touch number: number of concurrent contacts involved

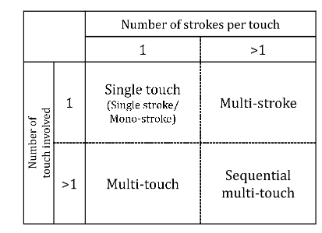

Based on the number of strokes and the touch number, gestures can be summarized into four types: single-touch, multi-stroke, multi-touch, and sequential multi-touch.

Typical interactions for each gesture type are:

- Single-touch: direct manipulation and indirect commands

- Multi-stroke: handwriting input

- Multi-touch: scrolling on a map application

- Sequential multi-touch: indirect commands
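The two counting criteria can be turned into a small classifier. This is a minimal sketch under an assumed reading of the taxonomy (the defining figure is not reproduced here): the touch number is taken as the maximum number of contacts down at the same time, and a multi-touch gesture is treated as "sequential" when its strokes form more than one temporally separated group. The `Stroke` type and its timestamp fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Stroke:
    """Hypothetical record of one contact: sampled points plus press/lift times (s)."""
    points: list[tuple[float, float]]
    t_start: float
    t_end: float


def max_concurrent(strokes: list[Stroke]) -> int:
    """Touch number: the largest count of contacts that are down simultaneously."""
    events = []
    for s in strokes:
        events.append((s.t_start, 1))
        events.append((s.t_end, -1))
    # At equal timestamps, lifts (-1) sort before presses (+1).
    events.sort()
    active = best = 0
    for _, delta in events:
        active += delta
        best = max(best, active)
    return best


def count_groups(strokes: list[Stroke]) -> int:
    """Number of temporally separated groups of overlapping strokes."""
    groups, group_end = 0, float("-inf")
    for s in sorted(strokes, key=lambda s: s.t_start):
        if s.t_start >= group_end:  # no overlap with the current group
            groups += 1
            group_end = s.t_end
        else:
            group_end = max(group_end, s.t_end)
    return groups


def categorize(strokes: list[Stroke]) -> str:
    if not strokes:
        raise ValueError("a gesture needs at least one stroke")
    if max_concurrent(strokes) == 1:
        return "single-touch" if len(strokes) == 1 else "multi-stroke"
    return "multi-touch" if count_groups(strokes) == 1 else "sequential multi-touch"
```

For example, two strokes drawn one after the other with a single finger come out as `"multi-stroke"`, while two overlapping contacts come out as `"multi-touch"`.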

Gesture Recognition

Single-touch Gesture

As the simplest gesture type, the single-touch gesture is commonly used in interactive systems for both direct manipulation and indirect commands.

When used for direct manipulation, a single-touch gesture mostly moves a selected virtual element. The system needs to measure the touch's movement direction, speed, and displacement to move the virtual element accordingly. Most of this movement information can be obtained directly from the underlying hardware and does not require complex recognition processing.
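Because these quantities come almost directly from the sampled contact positions, computing them takes only a few lines. The sketch below assumes the driver delivers time-ordered `(t, x, y)` samples for one contact; the function names `drag_kinematics` and `follow` are illustrative, not part of any real touch API.

```python
import math


def drag_kinematics(samples):
    """samples: time-ordered (t, x, y) tuples for one contact.

    Returns the net displacement (dx, dy), its direction in radians, and the
    average speed (net displacement divided by elapsed time).
    """
    (t0, x0, y0), (t1, x1, y1) = samples[0], samples[-1]
    dx, dy = x1 - x0, y1 - y0
    direction = math.atan2(dy, dx)
    dt = t1 - t0
    speed = math.hypot(dx, dy) / dt if dt > 0 else 0.0
    return (dx, dy), direction, speed


def follow(element_pos, prev_sample, curr_sample):
    """Move the element by the contact's displacement since the previous sample."""
    _, px, py = prev_sample
    _, cx, cy = curr_sample
    return (element_pos[0] + cx - px, element_pos[1] + cy - py)
```

Calling `follow` once per input frame makes the element track the finger; `drag_kinematics` over the whole drag gives the summary values a fling or inertia animation would need.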

For indirect commands, which do require recognition, there are two main strategies for recognizing single-touch gestures:

- sequence matching

- statistical recognition based on global features

The state-of-the-art sequence matching algorithm is the $1 recognizer [2], which resamples each stroke into a fixed number $N$ of points and normalizes its rotation, scale, and position. A candidate $C$ is then compared to each stored template $T_i$ by computing the average distance between corresponding points:

$$d_i = \frac{1}{N}\sum_{k=1}^{N}\sqrt{\left(C[k]_x - T_i[k]_x\right)^2 + \left(C[k]_y - T_i[k]_y\right)^2}$$

where $k$ is the point index, and $x$ and $y$ are the coordinates of each point. The template $T_i$ with the smallest path distance $d_i$ to $C$ is the recognition result.
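The resampling and path-distance steps can be sketched as follows. This is a simplified, hedged version of the $1 pipeline: it resamples, translates the centroid to the origin, and scales uniformly (a simplification; $1 itself scales each axis to a reference square), but it omits the rotation search, so it is not rotation invariant. `path_distance` implements $d_i$ from the equation above.

```python
import math

Point = tuple[float, float]


def path_length(pts: list[Point]) -> float:
    return sum(math.dist(pts[i - 1], pts[i]) for i in range(1, len(pts)))


def resample(points: list[Point], n: int = 64) -> list[Point]:
    """Resample a stroke into n points spaced equally along its path."""
    pts = list(points)
    interval = path_length(pts) / (n - 1)
    acc = 0.0
    out = [pts[0]]
    i = 1
    while i < len(pts):
        d = math.dist(pts[i - 1], pts[i])
        if d > 0 and acc + d >= interval:
            t = (interval - acc) / d
            q = (pts[i - 1][0] + t * (pts[i][0] - pts[i - 1][0]),
                 pts[i - 1][1] + t * (pts[i][1] - pts[i - 1][1]))
            out.append(q)
            pts.insert(i, q)  # q becomes the start of the next segment
            acc = 0.0
        else:
            acc += d
        i += 1
    while len(out) < n:  # floating-point rounding can leave us one point short
        out.append(pts[-1])
    return out[:n]


def normalize(pts: list[Point]) -> list[Point]:
    """Move the centroid to the origin and scale uniformly by the larger bbox side."""
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    cx, cy = sum(xs) / len(pts), sum(ys) / len(pts)
    size = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    return [((x - cx) / size, (y - cy) / size) for x, y in pts]


def path_distance(c: list[Point], t: list[Point]) -> float:
    """d_i: mean distance between corresponding points of C and T_i."""
    return sum(math.dist(p, q) for p, q in zip(c, t)) / len(c)


def recognize(candidate: list[Point], templates: dict, n: int = 64):
    """Return (template name, d_i) for the template with the smallest path distance."""
    c = normalize(resample(candidate, n))
    best_name, best_d = None, math.inf
    for name, pts in templates.items():
        d = path_distance(c, normalize(resample(pts, n)))
        if d < best_d:
            best_name, best_d = name, d
    return best_name, best_d
```

In practice the templates would be resampled and normalized once and cached rather than on every call.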

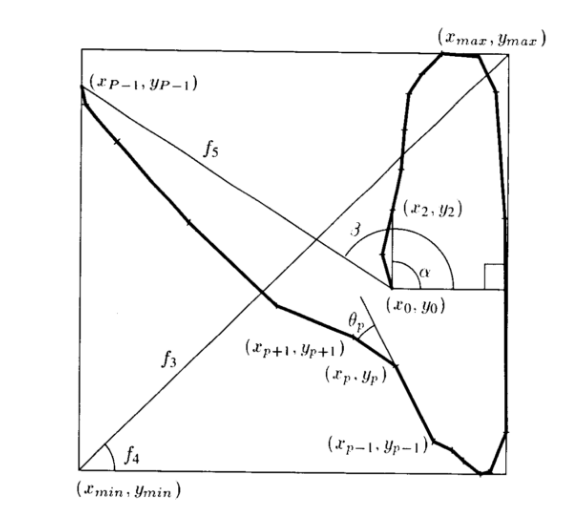

For statistical recognition based on global features, the typical choice is Rubine's global feature set, which has been widely used for recognizing single-stroke gestures. It employs 13 global features computed from the complete gesture shape. The accompanying figure shows some of the features used by Rubine: $f_3$ and $f_4$ are the length and angle of the bounding-box diagonal, and $f_5$ is the distance between the first and the last point. The full set of 13 features is given in Rubine's original paper.
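The three features named above can be computed directly from a stroke's points. This sketch covers only $f_3$, $f_4$, and $f_5$; the function name is illustrative, and `atan2` is used for $f_4$ so that a zero-width bounding box does not divide by zero.

```python
import math


def rubine_f3_f4_f5(pts):
    """pts: list of (x, y) points of one stroke, in drawing order."""
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    w = max(xs) - min(xs)
    h = max(ys) - min(ys)
    f3 = math.hypot(w, h)   # length of the bounding-box diagonal
    f4 = math.atan2(h, w)   # angle of the bounding-box diagonal
    (x0, y0), (xn, yn) = pts[0], pts[-1]
    f5 = math.hypot(xn - x0, yn - y0)  # distance between first and last point
    return f3, f4, f5
```

A closed shape such as a drawn rectangle yields $f_5 = 0$ while an open stroke with the same bounding box does not, which is exactly the kind of global distinction a statistical classifier can exploit.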

Multi-stroke Gesture

State-of-the-art multi-stroke gesture recognition techniques can be categorized into three approaches:

- Trajectory based

- Structure based

- Feature based

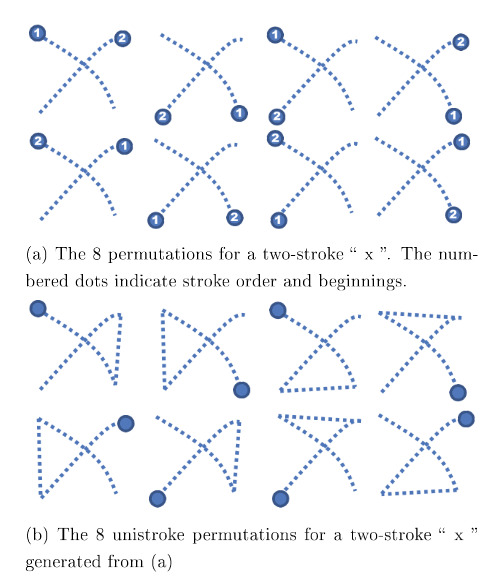

Trajectory-based gesture recognition can follow two strategies. The first transforms a multi-stroke gesture into multiple single strokes according to the start and end points of the trajectory; each single stroke can then be recognized using sequence matching or global features. The second is the $N recognizer, a significant extension of the $1 unistroke recognizer introduced above for sequence matching. The main idea of the $N recognizer is to permute the component strokes: each permutation represents one possible combination of stroke order and stroke direction. For $N$ strokes there are $N!$ possible orders and $2^N$ direction assignments, giving $N! \cdot 2^N$ combinations. The following figure shows the 8 possible permutations ($2! \cdot 2^2 = 8$) of a two-stroke “x”. Each permutation is converted into a unistroke by connecting the endpoints of its component strokes and stored in the template set for comparison. At runtime, each candidate multi-stroke gesture is likewise connected in drawing order to form a unistroke, which is compared against all unistroke permutation templates using the $1 algorithm.
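Generating the unistroke templates amounts to enumerating every stroke order and every direction assignment, then concatenating the point lists so that the end of one stroke connects to the start of the next. A minimal sketch, with an illustrative function name:

```python
from itertools import permutations, product


def unistroke_permutations(strokes):
    """strokes: list of strokes, each a list of (x, y) points.

    Returns N! * 2**N unistrokes, one per (order, direction) combination.
    """
    results = []
    for order in permutations(strokes):
        for flips in product((False, True), repeat=len(order)):
            uni = []
            for stroke, flip in zip(order, flips):
                # Reversing a stroke models drawing it in the opposite direction.
                uni.extend(reversed(stroke) if flip else stroke)
            results.append(uni)
    return results
```

For the two-stroke “x” this returns 8 unistrokes, and for three strokes 48, which shows why $N recognizer template sets grow quickly with the number of strokes.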

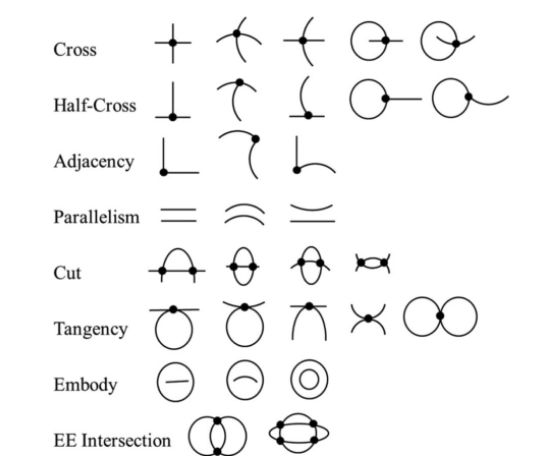

Instead of transforming the multi-stroke gesture into a single sequence, structure-based methods focus on each individual stroke and on the relations between pairs of strokes. Their main idea is to analyze the geometric relations between each pair of strokes and to represent a symbol as a semantic network of strokes and their relations. In 2015, Liang et al. [3] presented a typical state-of-the-art structure-based sketch recognition method that exploits topological relations and a graph representation of strokes. The noisy raw input of a handwritten sketch is first refined into sub-strokes using polygonal approximation, agglomerate point filtering, endpoint refinement, and convex hull calculation, and the sub-strokes are then fitted to a few primitive shapes.
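To illustrate the polygonal-approximation step, the sketch below uses the Ramer-Douglas-Peucker algorithm, a common polygonal approximation. It is a stand-in chosen for illustration, not necessarily the method used in [3]; `epsilon` is a hypothetical tolerance in the same units as the coordinates. Each pair of consecutive retained vertices becomes a line sub-stroke.

```python
import math


def point_segment_distance(p, a, b):
    """Distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0:
        return math.dist(p, a)
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.dist(p, (ax + t * dx, ay + t * dy))


def rdp(points, epsilon):
    """Keep only vertices that deviate more than epsilon from the chord."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    idx, dmax = 0, -1.0
    for i in range(1, len(points) - 1):
        d = point_segment_distance(points[i], a, b)
        if d > dmax:
            idx, dmax = i, d
    if dmax <= epsilon:
        return [a, b]
    # Split at the farthest point and simplify both halves recursively.
    left = rdp(points[:idx + 1], epsilon)
    right = rdp(points[idx:], epsilon)
    return left[:-1] + right


def to_sub_strokes(vertices):
    """Consecutive vertex pairs become line sub-strokes."""
    return list(zip(vertices, vertices[1:]))
```

A noisy “L” shape collapses to three vertices and therefore two line sub-strokes, ready to be fitted to primitives.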

There are many ways to define the relations between a pair of primitives. Mas et al. present an approach that generates a set of adjacency grammars based on five relations (parallel, perpendicular, incident, adjacent, and intersects). This set determines the final grammatical ruleset that characterizes a symbol. The following figure shows examples of topological relations between primitives.
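For line primitives, three of these relations reduce to simple geometric tests. The sketch below is an assumption-laden illustration, not Mas et al.'s definitions: `angle_tol` is a hypothetical tolerance, "intersects" is taken to mean a proper crossing, and "incident" and "adjacent" are left out because their exact definitions depend on the original grammar.

```python
import math


def _angle(seg):
    (x0, y0), (x1, y1) = seg
    return math.atan2(y1 - y0, x1 - x0)


def _orient(a, b, c):
    """Sign of the cross product (b - a) x (c - a)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def segments_intersect(s, t):
    """True only for a proper crossing; touching endpoints do not count."""
    (p1, p2), (p3, p4) = s, t
    d1, d2 = _orient(p3, p4, p1), _orient(p3, p4, p2)
    d3, d4 = _orient(p1, p2, p3), _orient(p1, p2, p4)
    return d1 * d2 < 0 and d3 * d4 < 0


def relations(s, t, angle_tol=math.radians(10)):
    """Return the subset of {parallel, perpendicular, intersects} that holds."""
    diff = abs(_angle(s) - _angle(t)) % math.pi  # ignore stroke direction
    diff = min(diff, math.pi - diff)             # fold into [0, pi/2]
    rels = set()
    if diff <= angle_tol:
        rels.add("parallel")
    elif abs(diff - math.pi / 2) <= angle_tol:
        rels.add("perpendicular")
    if segments_intersect(s, t):
        rels.add("intersects")
    return rels
```

The angle tolerance is what makes these tests usable on hand-drawn input, where no two strokes are ever exactly parallel.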

A more popular solution in pattern recognition is to extract features and use them to train and feed a statistical classifier such as kNN, a neural network, or an SVM. In this approach, multi-stroke gesture recognition is very similar to isolated character recognition, where characters can be seen as isolated multi-stroke symbols and the basic features are inherited from Rubine's single-touch gesture features. The trend is toward increasingly complex features that combine static information (stroke number, convex hull, area) with dynamic information (average direction, velocity, curvature).
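The feature-based pipeline can be sketched with a tiny feature extractor and a kNN classifier. The feature choice here is a hypothetical minimum: stroke count and bounding-box area (standing in for the convex-hull area) as static features, and mean pen-down speed as a dynamic one. Real systems use many more features and normalize their scales before computing distances.

```python
import math
from collections import Counter


def features(gesture):
    """gesture: list of strokes; each stroke is a list of (t, x, y) samples."""
    xs = [x for stroke in gesture for _, x, _ in stroke]
    ys = [y for stroke in gesture for _, _, y in stroke]
    area = (max(xs) - min(xs)) * (max(ys) - min(ys))  # static: bounding-box area
    length = duration = 0.0
    for stroke in gesture:
        for (t0, x0, y0), (t1, x1, y1) in zip(stroke, stroke[1:]):
            length += math.hypot(x1 - x0, y1 - y0)
            duration += t1 - t0  # pen-down time only; gaps between strokes ignored
    mean_speed = length / duration if duration > 0 else 0.0  # dynamic
    return (float(len(gesture)), area, mean_speed)


def knn_classify(query, training, k=3):
    """training: list of (feature_vector, label); majority vote of the k nearest."""
    nearest = sorted(training, key=lambda item: math.dist(query, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

kNN is used here only because it needs no training step; an SVM or neural network would consume the same feature vectors.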

Multi-touch Gesture

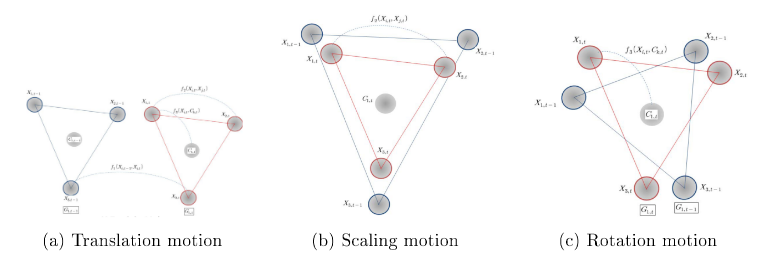

The most common use of multi-touch gestures is to directly manipulate a virtual element on the touch interface. A direct-manipulation system usually has a limited gesture vocabulary, such as click for selection, drag for moving, and pinch for zoom. Recognition for direct manipulation is achieved by analyzing the spatial displacement of the fingers over time. For instance, the following recognition scheme allows three motions for multi-touch direct manipulation: translation, scaling, and rotation, determined from the displacement of the touch points.

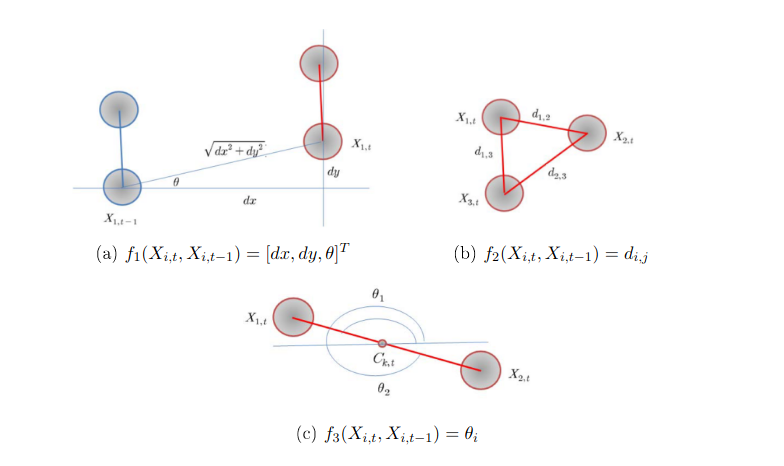

To detect the user's intention from the trajectories, the scheme defines three motion parameters, one for each motion, that measure the displacement of the touch points between times $t-1$ and $t$. The definitions of the three motion parameters are shown in the following figure. The system triggers the corresponding motion operation when the variation of any motion parameter exceeds a certain threshold. An alternative set of motion feature functions (for swipe, rotate, and zoom) is also shown in the figure: $f_1$ measures the translation vector of the $i$-th point pair between times $t-1$ and $t$, $f_2$ measures the distance $d_{i,j}$ between points $i$ and $j$ at a given time, and $f_3$ measures the rotation angle $\theta_i$ of the $i$-th point between times $t-1$ and $t$.
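For a two-finger gesture, quantities in the spirit of $f_1$, $f_2$, and $f_3$ and the threshold trigger can be sketched as below. Assumptions, none of them taken from the figure: translation is the mean displacement of the two contacts, scaling is the change in the distance $d_{1,2}$, rotation is the change in orientation of the line through both contacts, and the default thresholds are hypothetical values in pixels and radians.

```python
import math


def motion_parameters(prev, curr):
    """prev, curr: [(x, y), (x, y)] for the same two contacts at t-1 and t."""
    # f1-style: translation vector, averaged over the two contacts
    tx = sum(c[0] - p[0] for p, c in zip(prev, curr)) / 2
    ty = sum(c[1] - p[1] for p, c in zip(prev, curr)) / 2
    # f2-style: change in the inter-contact distance d_{1,2}
    dd = math.dist(curr[0], curr[1]) - math.dist(prev[0], prev[1])
    # f3-style: change in orientation of the line through both contacts
    a_prev = math.atan2(prev[1][1] - prev[0][1], prev[1][0] - prev[0][0])
    a_curr = math.atan2(curr[1][1] - curr[0][1], curr[1][0] - curr[0][0])
    rot = math.atan2(math.sin(a_curr - a_prev), math.cos(a_curr - a_prev))  # wrap to (-pi, pi]
    return (tx, ty), dd, rot


def triggered_motions(prev, curr, move_tol=5.0, scale_tol=5.0, rot_tol=math.radians(5)):
    """Return the motions whose parameter variation exceeds its threshold."""
    (tx, ty), dd, rot = motion_parameters(prev, curr)
    motions = []
    if math.hypot(tx, ty) > move_tol:
        motions.append("translate")
    if abs(dd) > scale_tol:
        motions.append("scale")
    if abs(rot) > rot_tol:
        motions.append("rotate")
    return motions
```

Because each parameter has its own threshold, one frame can trigger several motions at once, for example a pinch that also drifts sideways.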

References

[1] Michael Schmidt and Gerhard Weber. Template based classification of multi-touch gestures. Pattern Recognition, 46(9):2487-2496, September 2013.

[2] Jacob O. Wobbrock, Andrew D. Wilson, and Yang Li. Gestures without libraries, toolkits or training: A $1 recognizer for user interface prototypes. In Proceedings of the 20th Annual ACM Symposium on User Interface Software and Technology. ACM, 2007.

[3] Shuang Liang, Jun Luo, Wenyin Liu, and Yichen Wei. Sketch matching on topology product graph. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015.

[4] Chi-Min Oh, Md Zahidul Islam, and Chil-Woo Lee. MRF-based particle filters for multi-touch tracking and gesture likelihoods. In 11th IEEE International Conference on Computer and Information Technology, 2011.

[5] Kai Ding, Zhibin Liu, Lianwen Jin, and Xinghua Zhu. A comparative study of Gabor feature and gradient feature for handwritten Chinese character recognition. In 2007 International Conference on Wavelet Analysis and Pattern Recognition, 2007.

[6] Zhaoxin Chen. Recognition and interpretation of multi-touch gesture interaction. Human-Computer Interaction. INSA de Rennes, 2017.